Optimization testing

Making a website perform better, improving it to increase visitor satisfaction and, ultimately, obtaining higher conversion rates are top priorities for all marketers. Unfortunately, this is a tedious and complex task, and without the proper tools it can be nearly impossible to measure the effect (positive or negative) of the changes you decide to make to the website. Marketing Factory integrates support tools that make this kind of testing easy. With precisely measured results and statistical data, you can make data-driven decisions instead of decisions based only on assumptions.

1. Optimization test definition

Optimization testing is, at its heart, the process of presenting different versions of a page (or part of a page) to visitors in order to determine statistically which version provides the best results (conversion rate) and user acceptance. Once the test is completed, the marketer can decide, based on precisely collected and measured data, which version performs best and then use it.

To run a test, you need:

- A place inside a page where the optimization test will occur.

- A piece of content or a digital asset inside this place that will serve as your control variant.

- One or more additional variants.

- A clear goal that will be your measure of success (with each success being called a conversion):

- a. A form submission.

- b. A page visit.

- c. A download.

- d. A video played.

When it comes to optimization tests, most online marketing platforms have limitations:

- Some of them limit tests to certain types of content (e.g., teasers, banners, images). Marketing Factory allows you to create optimization tests on any type of content, even on forms, which is very convenient when measuring the impact of adding or removing a particular form field.

- Most platforms do not allow you to test different types of items at the same time. If you decide to run a test on a banner, they let you create other banners as variants but nothing else. Marketing Factory lets you test more radical variants. For instance, you can display a simple call-to-action asset in variant A and a form in variant B.

1.1 Vocabulary

In Marketing Factory, a test is called an optimization test.

To avoid any confusion with terms commonly used when working with a content management system (CMS), the different pieces of content or assets served to visitors during an optimization test are called variants.

Digital marketers divide optimization testing into three main types of tests: A/B testing, A/Z testing and multi-variant testing. Unfortunately, the definition of each type is not consistent across all marketers, and the way a given testing platform handles a particular type of test can differ greatly from one platform to another, especially for multi-variant testing.

Accordingly, it is essential to understand what each type of test means in Marketing Factory.

1.1.1 A/B testing

A/B testing divides incoming traffic between two variants and measures the results (conversion rate) of each variant. A/B tests are easy to set up and their results are easy to interpret, but they are best suited to small changes.

In the most rigorous methodology, only one precise factor (such as color, size, position, text or asset) is changed at a time between the two variants, to make the results easier to interpret. The problem is that testing only one dimension at a time is slow. It is a powerful but slow iterative process.

A/B testing is often used to try variants of the text, color or size of buttons and other calls to action, as in the following examples:

Same content, same button, except for the button label:

Same content, same button label but a different button color:

A/B testing is very precise but, if you want to test multiple changes, you have to test each improvement one by one, which can take a significant amount of time.
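Dividing traffic between variants is the mechanical core of an A/B test. The following is a minimal sketch, not Marketing Factory's implementation: it assumes each visitor has a stable identifier (the `visitor_id` string and the `assign_variant` function are hypothetical) and hashes it together with the test name, so that a returning visitor always sees the same variant while overall traffic divides roughly evenly.

```python
import hashlib
from collections import Counter


def assign_variant(visitor_id: str, test_name: str, variants: list[str]) -> str:
    """Hypothetical sticky assignment of a visitor to one variant of a test.

    Hashing (test_name, visitor_id) gives a stable, roughly uniform bucket,
    so the same visitor always gets the same variant for a given test.
    """
    key = f"{test_name}:{visitor_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return variants[bucket % len(variants)]


variants = ["control", "variant-b"]

# A returning visitor is served the same variant every time.
first = assign_variant("visitor-42", "button-label-test", variants)
assert first == assign_variant("visitor-42", "button-label-test", variants)

# Over many visitors, traffic divides close to 50/50.
counts = Counter(
    assign_variant(f"visitor-{i}", "button-label-test", variants) for i in range(10_000)
)
print(counts)
```

Passing three or more names in `variants` turns the same function into an A/Z split.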

1.1.2 A/Z testing

A/Z testing divides traffic between three or more variants. It is an extension of A/B testing, and the same pros and cons apply. The more variants you test, the longer the test has to run to obtain significant results, but you have fewer tests to run.
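The trade-off can be made concrete with a little arithmetic. This sketch assumes traffic is split evenly and that you want a fixed number of views per variant; the 2,000 views and 1,000 daily visitors below are hypothetical figures (the number of views you actually need depends on your conversion rates and on the size of the difference you want to detect), and `days_to_reach` is an illustrative helper, not a Marketing Factory function.

```python
def days_to_reach(views_needed_per_variant: int, daily_visitors: int, n_variants: int) -> int:
    """Days until every variant has received `views_needed_per_variant` views,
    assuming traffic is split evenly between variants (integer ceiling division)."""
    total_views_needed = views_needed_per_variant * n_variants
    return -(-total_views_needed // daily_visitors)


# Hypothetical figures: 1,000 visitors a day, 2,000 views wanted per variant.
for n in (2, 3, 5):
    print(f"{n} variants: {days_to_reach(2_000, 1_000, n)} days")
# 2 variants: 4 days
# 3 variants: 6 days
# 5 variants: 10 days
```

Each extra variant lengthens the test proportionally, which is the price paid for running one A/Z test instead of several successive A/B tests.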

1.1.3 Multi-variant testing

Multi-variant testing consists of defining multiple variants for multiple areas of a page at the same time and serving each visitor a version of the page built from a randomly generated combination of all the possibilities. As a consequence, multi-variant testing requires a large number of visitors engaged in the test to obtain credible results. Interpreting those results can also be problematic for the marketer: why a particular combination worked better than the others is often difficult to assess. Yet optimization testing is not only about finding the best experience; it is also about understanding why that experience works better, because this understanding can drive further optimizations.

Marketing Factory allows you to do A/B and A/Z testing in multiple areas of the same page, but does not provide multi-variant testing analysis at this time.
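The traffic problem comes from combinatorics: the number of page versions is the product of the number of variants in each tested area. The sketch below uses hypothetical areas and variant names to show how quickly the number of combinations grows and how thinly a fixed audience is spread across them.

```python
import itertools
import math

# Hypothetical page with three tested areas and the variants defined for each.
areas = {
    "headline": ["H1", "H2", "H3"],
    "hero_image": ["img-a", "img-b"],
    "cta": ["Buy now", "Try free", "Learn more", "Get started"],
}

# Each visitor sees one combination: one variant per area.
combinations = list(itertools.product(*areas.values()))
n_combinations = math.prod(len(v) for v in areas.values())
assert len(combinations) == n_combinations

print(n_combinations)            # 24
print(combinations[0])           # ('H1', 'img-a', 'Buy now')
print(10_000 // n_combinations)  # 416 visitors per combination out of 10,000
```

With only 416 visitors per combination out of 10,000, each individual page version receives far less traffic than a variant in a simple A/B test would.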

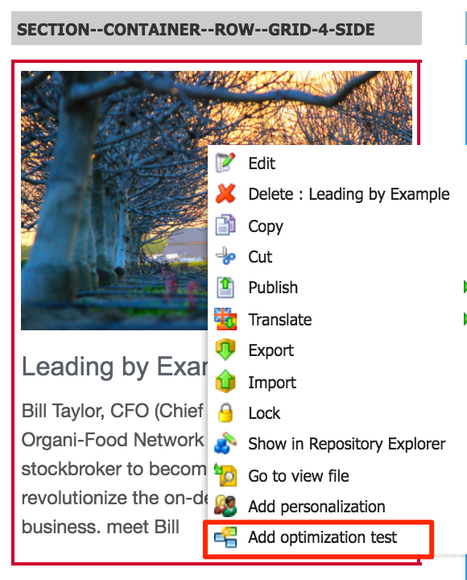

2. Creating a test on content

To create an optimization test, follow this procedure:

- Go to any page of the website (powered by Digital Experience Manager).

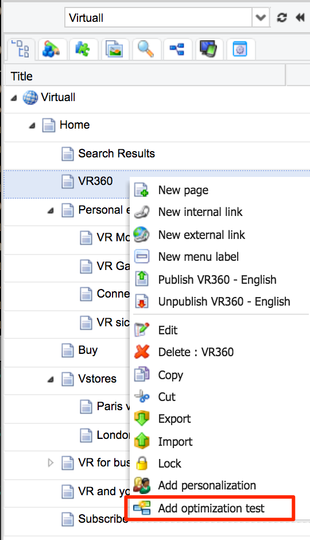

- Right-click on the page on which you want to create an optimization test.

- Click on Add optimization test.

- Create or insert new content in the list:

Create new content:

Paste existing content:

Paste existing content as a reference:

- Open the settings dialog by clicking on the main button:

- In the dialog box, complete the following fields

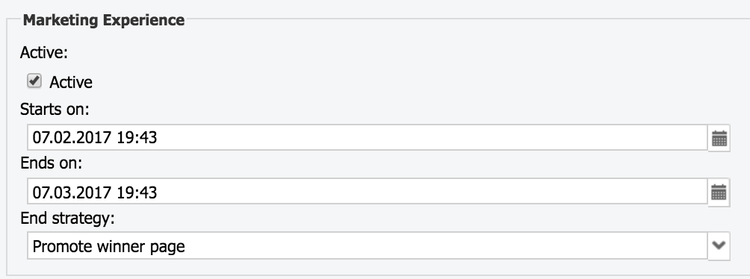

Define the goal to measure your success rate. As soon as a test has started, it is not possible to change the goal anymore; this ensures your results are valid. (Otherwise, the results could be completely false.)Field Usage System name Name of the test in the repository Active Checked by default. Uncheck to stop / pause the optimization test. Ajax rendering If youre experiencing troubles to display some of the variants it may be because of a JavaScript or css conflict. That situation can happen in particular with content coming from an external service and embedded in your page. Check the box to try an alternate type of rendering and see if it solves the issue. Starts on The personalization will start on that date. If the field remains empty, the personalization will start immediately (as soon as the page is published) Ends on The personalization will stop automatically at that date. If the field remains empty, the personalization will never, until a manual action. Maximum hits Maximum number of times the optimization test will be served to users before it stops. If you have two variants and 4,000 hits, then statistically each variant will be displayed 2,000 times (whatever the number of conversions) and will then stop the test. End strategy Lets you define what must happen when the test ends. There are two possibilities. First, Digital Experience Manager will automatically use the winning variant (the one with the highest conversion rate). Second, Digital Experience Manager will continue to serve the current control variant. Then it is up to you to decide manually to promote another variant. Control variant Lets you choose between all the variants as to which one should be the control - Save.

- Publish the area
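The Maximum hits and End strategy settings amount to simple arithmetic plus a decision rule. The sketch below only illustrates the behavior described in the table; the strategy names `promote_winner` and `keep_control`, the function names and the conversion rates are hypothetical and are not Marketing Factory settings or APIs.

```python
def expected_views_per_variant(max_hits: int, n_variants: int) -> float:
    """With an even split, each variant is served about max_hits / n_variants times."""
    return max_hits / n_variants


def variant_after_end(conversion_rates: dict[str, float], control: str, end_strategy: str) -> str:
    """Return the variant served once the test ends.

    "promote_winner": serve the variant with the highest conversion rate.
    "keep_control":   keep serving the control; promoting another variant is manual.
    """
    if end_strategy == "promote_winner":
        return max(conversion_rates, key=conversion_rates.get)
    if end_strategy == "keep_control":
        return control
    raise ValueError(f"unknown end strategy: {end_strategy!r}")


print(expected_views_per_variant(4_000, 2))  # 2000.0

rates = {"control": 0.030, "variant-b": 0.040}  # hypothetical results
print(variant_after_end(rates, "control", "promote_winner"))  # variant-b
print(variant_after_end(rates, "control", "keep_control"))    # control
```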

3. Creating a test on a page

To create an optimization test, follow this procedure:

- Go to any page of the website (powered by Digital Experience Manager).

- Right-click on the page on which you want to create an optimization test.

- Click on Add optimization test.

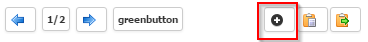

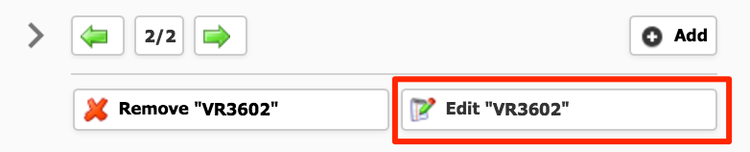

Add optimization test on a whole page

- Create a new page from the dedicated toolbar that appears at the top right:

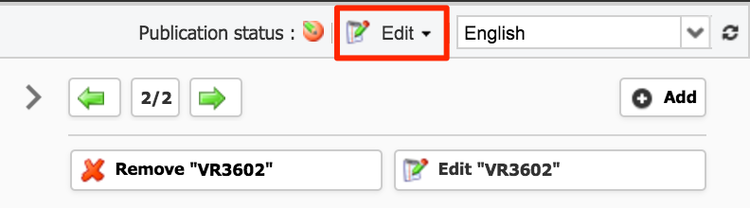

Please note that, by default, the existing page is copied as the next variant so you can modify it instead of starting from scratch.

- If you want to edit the current variant, you can use the toolbar:

- To open the settings dialog, go to the main page edit function, either through the site tree in the left panel or with the edit button at the top right:

The settings dialog is the same as the one available for content level optimization tests.

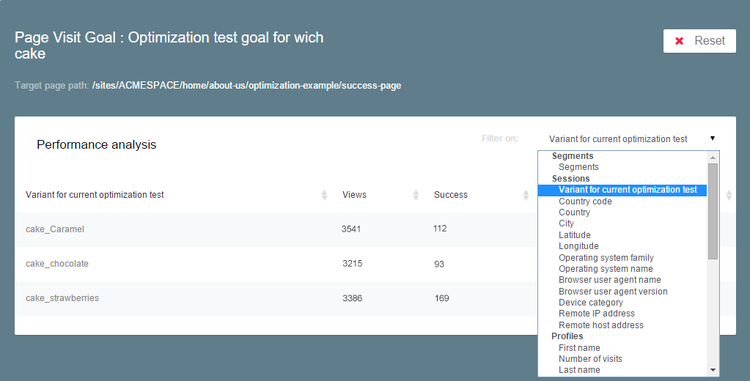

4. Test results

Once an optimization test has started, you can follow the results in-context.

- Go into the page containing your test

- Open the settings dialog by clicking on the main button:

- Click on the Goal tab

This tab no longer provides the interface for choosing the goal of the test (changing the goal in the middle of a test would lead to inconsistent data); instead, it displays the measured results.

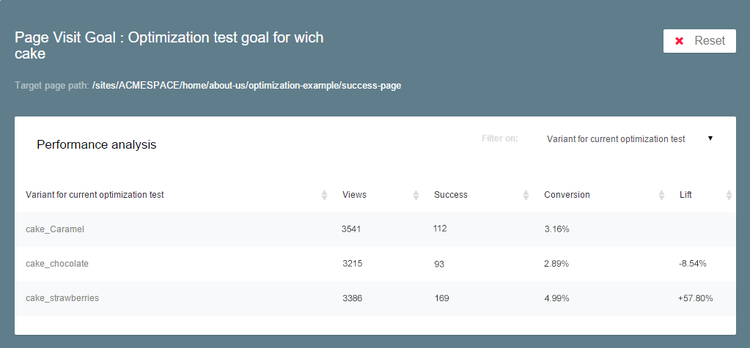

Results are displayed as a table with the following columns:

- Number of views: number of times the variant has been displayed

- Success: number of visitors having seen this variant who converted (achieved the goal)

- Conversion rate: successes as a percentage of views

- Lift: the percentage difference between the conversion rate of your control variant and that of each other variant
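The table's columns can be derived from two counts per variant. This sketch assumes conversion rate = successes / views and lift = relative difference from the control's conversion rate, expressed as a percentage; Marketing Factory may compute lift differently (for example, as a difference in percentage points), and all figures are hypothetical.

```python
# Hypothetical results: variant name -> (views, successes)
results = {
    "control": (2_000, 60),
    "variant-b": (2_000, 80),
    "variant-c": (2_000, 50),
}


def conversion_rate(views: int, successes: int) -> float:
    return successes / views if views else 0.0


def lift_percent(rate: float, control_rate: float) -> float:
    """Relative change versus the control, in percent (assumed definition)."""
    if control_rate == 0:
        return float("nan")  # lift is undefined when the control has no conversions
    return (rate - control_rate) / control_rate * 100


control_rate = conversion_rate(*results["control"])
for name, (views, successes) in results.items():
    rate = conversion_rate(views, successes)
    print(f"{name:10} views={views} success={successes} "
          f"rate={rate:.1%} lift={lift_percent(rate, control_rate):+.1f}%")
```

With these figures, variant-b converts at 4.0% against 3.0% for the control, a lift of about +33.3%, while variant-c performs worse than the control.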

This first level of reporting lets you easily identify which variant has the highest conversion rate and may therefore improve performance if promoted as the default variant when the test ends.

In fact, these reports work like all other reports in Marketing Factory and let marketers break down the results using the filtering options.

Thanks to this capability, marketers not only know which variant works better for the overall audience, but can also see whether another variant works much better for a subset of the audience. In that case, promoting the winner will improve the conversion rate, but creating a permanent personalization that serves each audience segment the variant that works best for it can improve the results even further.

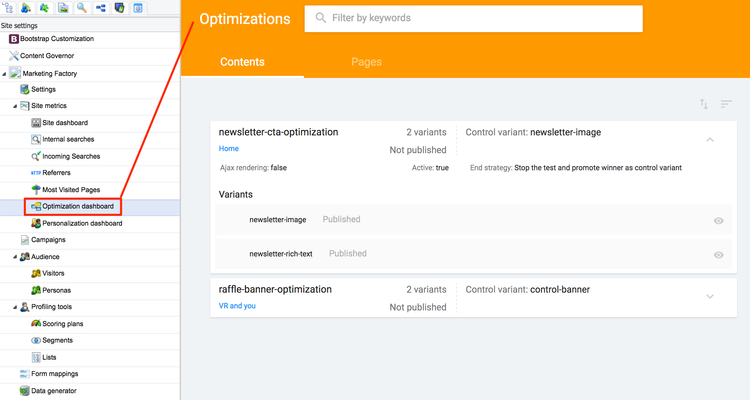
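The segment-level analysis can be sketched as follows. The segments, variant names and counts are hypothetical, chosen so that the overall winner is not the best variant for every segment, which is precisely the case where a permanent personalization pays off; the helper functions are illustrative and not part of Marketing Factory.

```python
from collections import defaultdict

# Hypothetical results broken down by segment: (segment, variant) -> (views, successes)
results = {
    ("desktop", "control"): (1_000, 20),
    ("desktop", "variant-b"): (1_000, 50),
    ("mobile", "control"): (1_000, 40),
    ("mobile", "variant-b"): (1_000, 30),
}


def overall_winner(results: dict) -> str:
    """Variant with the highest conversion rate across all segments combined."""
    totals = defaultdict(lambda: [0, 0])  # variant -> [views, successes]
    for (_, variant), (views, successes) in results.items():
        totals[variant][0] += views
        totals[variant][1] += successes
    return max(totals, key=lambda v: totals[v][1] / totals[v][0])


def best_variant_per_segment(results: dict) -> dict[str, str]:
    """For each segment, the variant with the highest conversion rate."""
    best: dict[str, tuple[str, float]] = {}
    for (segment, variant), (views, successes) in results.items():
        rate = successes / views
        if segment not in best or rate > best[segment][1]:
            best[segment] = (variant, rate)
    return {segment: variant for segment, (variant, _) in best.items()}


print(overall_winner(results))            # variant-b
print(best_variant_per_segment(results))  # {'desktop': 'variant-b', 'mobile': 'control'}
```

Promoting variant-b everywhere raises the overall rate from 3% to 4%, but serving the control to mobile visitors and variant-b to desktop visitors would do better still.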

5. Optimization dashboard

The optimization dashboard can be found under Site settings => Marketing Factory => Optimization dashboard.

The dashboard has two tabs: one lists the optimization tests on content and the other lists the tests on whole pages.

The following information is displayed when you expand a test. For the test itself:

- System name

- Number of variants

- A link to the page containing the test

- Publication status of the test

- Ajax rendering: true / false

- Start date

- End date

- Control variant name

- End strategy

- Type of goal associated with the test

- Goal associated with the test (name of the form, name of the page view, …)

For each variant in the test:

- System name

- Publication status

- A preview button (note: the preview does not include the site's CSS and might not match the final rendering)

The text box at the top of the page helps you find the optimization test you are looking for. It filters the current list based on all the displayed fields (either for the variant or for the tested area).
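The dashboard's text filter behaves like a search across every displayed value of a test and its variants. The sketch below reproduces that behavior under assumptions: matching is assumed to be a case-insensitive substring match, and the record layout and field names are hypothetical, not the dashboard's internal data model.

```python
def matches(record: dict, query: str) -> bool:
    """Case-insensitive substring match against every displayed field value."""
    q = query.casefold()
    return any(q in str(value).casefold() for value in record.values())


def filter_tests(tests: list[dict], query: str) -> list[dict]:
    """Keep a test if the query matches one of its own fields or any of its variants."""
    return [
        t for t in tests
        if matches(t["fields"], query) or any(matches(v, query) for v in t["variants"])
    ]


# Hypothetical dashboard rows.
tests = [
    {
        "fields": {"system_name": "homepage-cta-test", "goal_type": "form", "ajax_rendering": False},
        "variants": [{"system_name": "cta-blue"}, {"system_name": "cta-green"}],
    },
    {
        "fields": {"system_name": "pricing-page-test", "goal_type": "page view", "ajax_rendering": True},
        "variants": [{"system_name": "pricing-v1"}, {"system_name": "pricing-v2"}],
    },
]

print([t["fields"]["system_name"] for t in filter_tests(tests, "green")])      # ['homepage-cta-test']
print([t["fields"]["system_name"] for t in filter_tests(tests, "PAGE VIEW")])  # ['pricing-page-test']
```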

Testing is critical for marketers, but it is a complex activity. Setting up a test is straightforward, thanks to Marketing Factory's ease of use. But correctly defining the test variants, producing them and then interpreting the results is not a trivial job. The following recommendations will help you succeed with your testing.

Duration: the duration of your test is critical. Most sites do not have enough traffic to reach statistical significance in a day or two, and such a short test does not reach your entire audience in its full diversity. You should run your test for at least 3 or 4 days to let your traffic normalize across all segments. Ideally, your test should run for a full week, including weekdays and a weekend, because visitor behavior often differs between weekdays and weekends.

Atypical traffic: be aware that a marketing campaign running at the same time as your test can send atypical visitors and influence the results. That does not mean you should avoid running campaigns while testing; rather, you should keep this in mind when analyzing the results.

Cost: continuous testing requires you to produce a lot of content (digital assets, text variants, sometimes layout changes, etc.). Carefully evaluate the expected benefits, but also consider the resources necessary to run a truly successful optimization campaign (meaning multiple or permanent tests). Do not launch such a project if you do not have the resources to sustain it.

Remember that optimization testing is not a magic wand and that sometimes (even often!) the test results can be disappointing, simply because visitors do not respond significantly better or worse to the variants than to your control version. In that case, the test will not reveal a better option than what is already online. Testing should be a continuous effort to find what works and what does not; you will have to learn iteratively from each success or failure.
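Statistical significance is what the duration recommendation protects. This guide does not state which statistic Marketing Factory uses, so the sketch below swaps in a standard two-proportion z-test, a common choice for comparing two conversion rates; the counts are hypothetical. It shows why a few days of traffic may not be enough: the same 3% versus 4% difference is not significant with 2,000 views per variant, but is with 20,000.

```python
import math


def two_proportion_z_test(views_a: int, success_a: int, views_b: int, success_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test comparing B against A. Returns (z, p_value)."""
    rate_a = success_a / views_a
    rate_b = success_b / views_b
    pooled = (success_a + success_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all (0% or 100% conversion everywhere)
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Hypothetical counts: same conversion rates (3% vs 4%), ten times more traffic.
for scale in (1, 10):
    z, p = two_proportion_z_test(2_000 * scale, 60 * scale, 2_000 * scale, 80 * scale)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"views per variant={2_000 * scale}: z={z:.2f}, p={p:.4f} -> {verdict} at 5%")
```

A significant result after one or two days can still be misleading if those days do not represent your audience, which is why running the test over a full week remains good practice.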