Integrating external data sources

The External Data Provider module provides an API that integrates external systems as content providers like the JCR by implementing an External Data Source. The data source implementation just needs to manage the connection to the external system and the data retrieval. The External Data Provider does all the mapping work to make the external content appear in the JCR tree.

All data sources must provide content (reading). They can also provide search capabilities and write access to create and update content. They can also be enhanceable, meaning that the ‘raw’ content they provide can be enhanced by Jahia content. For example, editors can add comments to an object provided by an External Data Provider.

When to use an EDP?

External Data Provider is not the simplest pattern to implement in Jahia. To integrate with another solution, combining the following three patterns is enough most of the time:

- A simple content type definition

- An OSGi service that does an API call

- A JSP taglib

The table below summarizes the capabilities of an EDP implementation versus a simple component that uses a traditional Java service. In short, EDP is typically suited to aggregating or mounting content from other sources (products, assets, files, APIs).

| | Simple Jahia components that use a Java service that calls an API | EDP implementation | EDP implementation with Elasticsearch data replication |
|---|---|---|---|
| Complexity to implement | Simple | Medium | High |
| Integration with search: make it possible for editors or visitors to search for external content (if the searchable interface is implemented) | No | Yes | Yes |
| Integration with permissions | No | Yes | Yes |
| Use existing UIs to browse content (if the browsable interface is implemented) | No, custom UIs must be provided | Yes | Yes |
| Allow editors to override properties of external content (to be confirmed: if the editable interface is implemented) | No | Yes | Yes |
| Independent from external API performance and resilience | No | No | Yes |
| Requires data replication | No | No | Yes |

Comparison of EDP implementations

| | Supported by Jahia | Basic readable | Searchable | Browsable | Writeable | Based on replicated data in ES |
|---|---|---|---|---|---|---|
| The Movie DB (source code) | No | Yes | Yes | Yes | No | No |
| VFS (source code) | Yes | Yes | No | Yes | Yes | No |
| Cloudinary (source code) | Yes | Yes | No | No | No | No |
| Keepeek (source code) | Yes | Yes | No | No | No | No |
| Akeneo | No | Yes | ? | Yes | Yes | Yes |

Things to consider before implementing EDP

EDP's main functionality is the integration of external content within Jahia JCR repository. Thus, you need to define a plan prior to starting that kind of development.

Questions to ask before any other considerations

- Do I need to browse external data as a 'Tree like' structure?

- Do I want to provide indexation and search features on my external data?

- Do I need to propagate security constraints over my external content?

- Do I want to integrate read/write elements in my external data?

- Do I want to mix Jahia content with external data?

If you answered yes to one or more of the above questions, implementation of an EDP is probably the right choice.

Elements to consider for your implementation

- Reference your external data model and define a JCR mapping for each external entity

- Define how you are going to map your entities in a tree structure

  - You must be able to generate a path to an entity from its unique ID, without any context (not knowing the parent)

  - If you map multiple entities in your provider, you must be able to retrieve an entity from its ID (for example by using a suffix or a specific pattern to distinguish the IDs of one entity type from another)

  - Ensure that each entity has one and only one ID (you can't map the same entity ID to several paths)

- Think about failover and a caching strategy to ensure the best performance when accessing your external data

  - Avoid creating a tree mapping that could lead to more than 500 children for a node

  - Ensure that your external data source can be accessed at any time without failures, or adopt a failover strategy

  - Use the Jahia internal cache if needed to store your entities when access time can include network latency

  - Do not hesitate to use a specific Data Access Layer in your EDP to decouple tree browsing and data access

- Look at existing EDP reference implementations

- Consider using UUIDs

  - If your external IDs are UUIDs, you can use them directly, which avoids the costly mapping between external IDs and Jahia IDs in the JCR.

  - Using UUIDs with multiple entity types can introduce complexity: accessing an entity from its UUID may require a lookup in each entity collection to find the matching one.

How it works

Specify your mapping

Your external content must be mapped as nodes inside Jahia so that Jahia can use it like regular nodes (edit, copy, paste, reference). This means that your external provider module must provide a CND definition file for each type of content (entity) that you plan to map into Jahia.

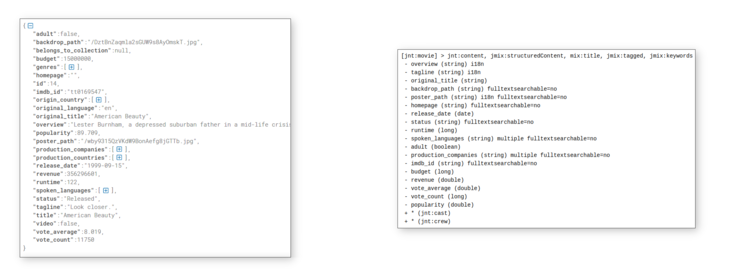

As in The Movie DB sample EDP, you can map a JSON object (it could also have been a database table) to a Jahia node type, defining each JSON property as a JCR property.

Then, define a tree structure for your objects. As they will appear in the Jahia repository, you’ll have to pick a parent and children for each entry.

It is very important that each node has a unique path. You must be able to find an object from a path and also know the path from an object. The node returned by a path must always be the same, and not depend on contextual information. If your nodes depend on the context (for example, the current user), you’ll need to have different paths. To correctly create a node hierarchy, it’s perfectly acceptable to add virtual nodes to act as containers for organizing your data.

In The Movie DB sample, some virtual nodes are used to split the API into categories: /movies, /persons, /genres. Those first-level nodes are mapped using the jnt:contentFolder JCR node type. They do not come from the API and exist for organisational purposes only. Due to the very large number of films in the database, the /movies node is also split into organisational subnodes to allow efficient browsing. Virtual nodes for 'year' and 'month' split the content into subtrees, which gives a movie path like /movies/1999/03/603.

As the movie entity also contains credit information, there is also a mapping under the movie node to browse the cast and crew of the movie, like /movies/1999/03/603/cast_6384 and /movies/1999/03/603/crew_9342. In that particular case, note the use of an ID prefix to separate the IDs of the cast and crew entities.
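The path scheme described above can be sketched in plain Java. This is only an illustration: the class MoviePaths and its methods are hypothetical and are not taken from the TMDB sample, and the release date is assumed to be available from the entity once it has been loaded by its ID.

```java
import java.time.LocalDate;
import java.util.Locale;

/** Hypothetical helper that builds and parses paths shaped like the ones above. */
final class MoviePaths {

    private MoviePaths() {
    }

    /** Builds /movies/{year}/{month}/{id}; the month is zero-padded to two digits. */
    static String moviePath(long movieId, LocalDate releaseDate) {
        return String.format(Locale.ROOT, "/movies/%d/%02d/%d",
                releaseDate.getYear(), releaseDate.getMonthValue(), movieId);
    }

    /** Builds the child node name of a credit, for example cast_6384 or crew_9342. */
    static String creditName(String kind, long creditId) {
        return kind + "_" + creditId;
    }

    /** Returns the entity kind carried by the prefix of a credit node name. */
    static String creditKind(String nodeName) {
        int separator = nodeName.indexOf('_');
        if (separator <= 0) {
            throw new IllegalArgumentException("Not a credit node name: " + nodeName);
        }
        return nodeName.substring(0, separator);
    }

    public static void main(String[] args) {
        // Sample values only: movie 603 with an arbitrary date in March 1999
        String movie = moviePath(603, LocalDate.of(1999, 3, 31));
        System.out.println(movie);
        System.out.println(movie + "/" + creditName("cast", 6384));
    }
}
```

Because the path is computed from the entity alone, the same entity always resolves to the same path, whatever node was browsed before.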

Under the /persons node, children cannot be listed, since ordering people in a subtree does not seem relevant and there is no need to browse persons. Nevertheless, persons involved in movies can be referenced using their path /persons/{person-id}, and they can be accessed and possibly searched. In that case, only tree browsing is disabled. In your EDP, you can choose to make some of your entities browsable and leave others reachable by direct path only, according to your needs.

Optionally, you can define a unique identifier for every node. The External Data Provider will map this identifier to a JCR compatible UUID if needed, so that it can be used in Jahia as any other node.

Declaring your data source

External data is accessed through a JCR provider declared as an OSGi service, in which you set information such as the provider key, the mount point, and the data source implementation, as shown in this example.

    /**
     * An external data provider data source implementation to expose TMDB movies as external nodes
     */
    @Component(service = { ExternalDataSource.class, TMDBDataSource.class }, immediate = true,
            configurationPid = "org.jahia.modules.tmdbprovider")
    @Designate(ocd = TMDBDataSourceConfig.class)
    public class TMDBDataSource implements ExternalDataSource, ExternalDataSource.LazyProperty, ExternalDataSource.Searchable, ExternalDataSource.CanLoadChildrenInBatch {

        private static final Logger LOGGER = LoggerFactory.getLogger(TMDBDataSource.class);
        private static final List<String> EXTENDABLE_TYPES = List.of("nt:base");

        @Reference
        private ExternalContentStoreProviderFactory externalContentStoreProviderFactory;

        private ExternalContentStoreProvider externalContentStoreProvider;

        @Reference
        private TMDBMapperFactory mapperFactory;

        public TMDBDataSource() {
        }

        @Activate
        public void start(TMDBDataSourceConfig config) throws RepositoryException {
            LOGGER.info("Starting TMDBDataSource...");
            // Create the provider and set the data source, mount point and provider key
            externalContentStoreProvider = externalContentStoreProviderFactory.newProvider();
            externalContentStoreProvider.setDataSource(this);
            externalContentStoreProvider.setExtendableTypes(EXTENDABLE_TYPES);
            externalContentStoreProvider.setMountPoint(config.mountPoint());
            externalContentStoreProvider.setKey("TMDBProvider");
            try {
                externalContentStoreProvider.start();
            } catch (JahiaInitializationException e) {
                throw new RepositoryException("Error initializing TMDB Provider", e);
            }
            LOGGER.info("TMDBDataSource started");
        }

        @Deactivate
        public void stop() {
            LOGGER.info("Stopping TMDBDataSource...");
            if (externalContentStoreProvider != null) {
                externalContentStoreProvider.stop();
            }
            LOGGER.info("TMDBDataSource stopped");
        }

        (...)

As you can see in that code sample, the data source implements some specific interfaces, and the provider is registered during the OSGi component lifecycle.

Depending on how deeply you need to integrate, you can implement all or some of the EDP interfaces, as described in the following sections.

Implementation

Providing and reading content

The main point for defining a new provider is to implement the ExternalDataSource interface provided by the external-provider module (org.jahia.modules.external.ExternalDataSource). This interface requires you to implement the following methods so that you can mount and browse your data as if they were part of the Jahia content tree.

- getItemByPath

- getChildren

- getItemByIdentifier

- itemExists

- getSupportedNodeTypes

- isSupportsUuid

- isSupportsHierarchicalIdentifiers

The first method, getItemByPath(), is the entry point for the external data. It must return an ExternalData node for all valid paths, including the root path (/). ExternalData is a simple Java object that represents an entry of your external data. It contains the id, path, node types and properties encoded as strings (or Binary objects for binary properties).

Be aware that the root node will be loaded as soon as the EDP starts to test connectivity.

The getChildren method also needs to be implemented for all valid paths. It must return the names of all subnodes, as you want them to appear in the Jahia repository. For example, if you map a table or the result of a SQL query then this is the method that will return all the results. Note that it is not required that all valid nodes are listed here. If they don’t appear here, you won’t see them in the repository tree, but you still can access them directly by path or through a search. This is especially useful if you have thousands of nodes at the same level.

These two methods reflect the hierarchy you will give to Jahia.

The getItemByIdentifier() method returns the same ExternalData node, but based on the internal identifier you want to use. You also have to give the unique path that belongs to that identifier in your tree model.

The getSupportedNodeTypes() method simply returns the list of node types that your data source may contain.

isSupportsUuid() tells the External Data Provider that your external data has identifiers in the UUID format. This prevents Jahia from creating its own identifiers and maintaining a mapping between its UUIDs and your identifiers. In most cases, it returns false.

isSupportsHierarchicalIdentifiers() specifies whether your identifier actually looks like the path of the node, and allows the provider to optimize some operations such as move, where your identifier will be “updated”. This is useful, for example, in a filesystem provider if you want to use the path of the file as its identifier.

itemExists() simply tests if the item at the given path exists.

Identifier Mapping

The first time Jahia reads an external node, it generates a unique identifier for it inside Jahia. Those mapped identifiers are stored in a table called jahia_external_mapping. This table maps the internal ID to a pair made of the provider key and the external ID returned by the ExternalData.getIdentifier method.

When the tree is browsed for the first time, that generation occurs for each node that is listed and may cause performance issues when many nodes are browsed. Several optimizations help bypass that bottleneck, such as using UUIDs or limiting the number of children of a node or of a specific tree level.
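The mapping can be pictured with a small in-memory sketch. It is not Jahia's implementation, which persists the mapping in the jahia_external_mapping table; the class and method names are hypothetical and only show why the first access to a node costs more than later ones.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

/** In-memory illustration of a (provider key, external ID) to internal UUID mapping. */
final class IdentifierMappingSketch {

    // Key: provider key and external ID joined by a newline; value: generated internal UUID
    private final Map<String, UUID> internalIds = new ConcurrentHashMap<>();

    /** Returns the internal UUID of the pair, generating it on first access only. */
    UUID internalIdFor(String providerKey, String externalId) {
        return internalIds.computeIfAbsent(providerKey + '\n' + externalId,
                key -> UUID.randomUUID());
    }

    /** Number of mappings created so far. */
    int size() {
        return internalIds.size();
    }
}
```

Listing a node with many children for the first time creates one such entry per child, which is where limiting the number of children per node helps.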

ExternalData

The External Data Source is responsible for mapping its data content into the ExternalData object. ExternalData provides access to the properties of your content. Those properties must be converted to one of two types: String or Binary. Strings can be internationalized or not, depending on how they are declared in the CND file.

In The Movie DB sample EDP, some dedicated classes are designed to manage that mapping and inserting the result in a cache. A specific ProviderData object is used to delegate path generation to another component. Using the same pattern you can decouple your external data collections and the tree mapping focusing on data mapping and caching strategy. The resulting solution may also ease the testability of your provider.

Lazy Loading

If your external provider accesses data that is expensive to read (performance or memory-wise), you can implement the ExternalDataSource.LazyProperty interface and fill the lazyProperties, lazyI18nProperties, and lazyBinaryProperties sets inside ExternalData. If someone tries to get a property that is not in the properties map of ExternalData, but is in one of those sets, the system calls one of these methods to get the values:

- getBinaryPropertyValues

- getI18nPropertyValues

- getPropertyValues

For example, the ModuleDataSource retrieves the source code as lazy properties, so that the source code is read from the disk only when it is displayed, not when the file is listed in the tree for exploration. You must decide which type of loading you want to implement. For example, on a database it may be more efficient to read all the data at once (binaries excepted), depending on the number of rows and columns.
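The lazy-loading idea can be sketched with stand-in types. The class below is hypothetical and does not use the real ExternalData or LazyProperty types; keeping the loaded value in the map after the first read is a choice of this sketch, not a statement about Jahia's behavior.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

/** Stand-in for an item holding eager properties and lazily loaded ones. */
final class LazyItemSketch {

    private final Map<String, String[]> properties = new HashMap<>();
    private final Set<String> lazyProperties;
    private final Function<String, String[]> loader;
    private int loads = 0;

    LazyItemSketch(Map<String, String[]> eager, Set<String> lazyProperties,
                   Function<String, String[]> loader) {
        this.properties.putAll(eager);
        this.lazyProperties = lazyProperties;
        this.loader = loader;
    }

    /** Returns the values, calling the loader only for a lazy property not yet loaded. */
    String[] getProperty(String name) {
        String[] values = properties.get(name);
        if (values == null && lazyProperties.contains(name)) {
            values = loader.apply(name);
            loads++;
            properties.put(name, values);
        }
        return values;
    }

    /** Number of times the loader has been called. */
    int loadCount() {
        return loads;
    }
}
```

Listing the item in a tree only touches the eager properties, so the expensive read happens when the lazy property is actually requested.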

Searching Content

Basic implementation

This capability requires you to implement the ExternalDataSource.Searchable interface, which defines only one method:

search(ExternalQuery query)

Where query is an ExternalQuery. For more information, see http://svn.apache.org/repos/asf/jackrabbit/site/tags/pre-markdown/content/api/1.4/index.html?org/apache/jackrabbit/core/query/RelationQueryNode.html.

Your method should be able to handle a list of constraints from the query (such as AND, OR, NOT, DESCENDANTNODE, and CHILDNODE). You do not have to handle everything if it does not make sense in your case.

The QueryHelper class provides some helpful methods to parse the constraints:

- getNodeType

- getRootPath

- includeSubChild

- getSimpleAndConstraints

- getSimpleOrConstraints

The getSimpleAndConstraints method returns a map of the properties and their expected values from the AND constraints in the query.

The getSimpleOrConstraints method returns a map of the properties and their expected values from the OR constraints in the query.

With these constraints, you build a query that makes sense for your external provider. For example, if it is an SQL database, map those constraints to AND conditions in the WHERE clause of your request. Queries are expressed using the JCR-SQL2 language definition.
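As a rough illustration of the SQL case, the sketch below turns a map of property names and expected values into a parameterized WHERE clause. It is a sketch under assumptions: the class is hypothetical, the constraints are taken as plain strings, and the whitelist that maps JCR property names to column names is an addition of this example to avoid building SQL from untrusted names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;

/** Hypothetical translation of simple AND constraints into a parameterized WHERE clause. */
final class WhereClauseSketch {

    final String sql;
    final List<String> parameters;

    private WhereClauseSketch(String sql, List<String> parameters) {
        this.sql = sql;
        this.parameters = parameters;
    }

    /**
     * @param constraints property name to expected value, in iteration order
     * @param columns     whitelist mapping each supported property name to a column name
     */
    static WhereClauseSketch fromAndConstraints(Map<String, String> constraints,
                                                Map<String, String> columns) {
        StringJoiner where = new StringJoiner(" AND ");
        List<String> parameters = new ArrayList<>();
        for (Map.Entry<String, String> constraint : constraints.entrySet()) {
            String column = columns.get(constraint.getKey());
            if (column == null) {
                throw new IllegalArgumentException("Unsupported property: " + constraint.getKey());
            }
            // Values are bound as parameters, never concatenated into the SQL text
            where.add(column + " = ?");
            parameters.add(constraint.getValue());
        }
        String sql = parameters.isEmpty() ? "" : "WHERE " + where;
        return new WhereClauseSketch(sql, parameters);
    }
}
```

The resulting sql and parameters can then be passed to a prepared statement of whatever database API the provider uses.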

Offset and Limit support

External data queries support the offset and limit query parameters. With multiple providers, results are returned by querying each provider in turn, in no specific order, but the order stays the same once the providers are mounted. This means that, for a given query, limit and offset can be used to paginate the results.
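The effect of a stable provider order on pagination can be illustrated as follows. This is a simplified sketch with hypothetical names that works on already loaded result lists; it does not reproduce how Jahia queries providers internally.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sequential aggregation of per-provider results with offset and limit. */
final class SequentialPagingSketch {

    private SequentialPagingSketch() {
    }

    /** Walks the providers in their fixed order, skips offset results, keeps at most limit. */
    static List<String> page(List<List<String>> resultsPerProvider, long offset, long limit) {
        List<String> page = new ArrayList<>();
        long toSkip = offset;
        for (List<String> results : resultsPerProvider) {
            for (String result : results) {
                if (toSkip > 0) {
                    toSkip--;
                    continue;
                }
                if (page.size() >= limit) {
                    return page;
                }
                page.add(result);
            }
        }
        return page;
    }
}
```

As long as the provider order does not change between calls, consecutive pages do not overlap, even though the overall result set is not globally sorted.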

Count

You can provide your own count capability by implementing ExternalDataSource.SupportCount and the following method:

count(ExternalQuery query)

This should return the number of results for the provided query. The query can be parsed the same way as in the search method. Whether you have one or multiple providers, count() always returns one row containing the number of rows matching the query.

Enhancing and merging external content with Jahia content

Jahia allows you to extend your external data content with some of its own mixins or to override some properties of your nodes from Jahia. This allows you to mix data in your definition, for example, external data and data defined in Jahia. In your OSGi service activation method, you can declare which of your nodes are extensible by additional mixin and properties, and which properties from your definition can be overridden or merged. This example specifies that all your types are extendable, but you can limit that to only certain nodes by listing their definitions. Any mixin can be added on nodes that are extendable.

provider.setExtendableTypes(EXTENDABLE_TYPES);

This example specifies that all properties from jtestnt:directory can be overridden inside Jahia. The following example specifies that only the firstclass_seats property from the airline definition can be overridden.

provider.setOverridableItems(OVERRIDABLE_ITEMS);

With regular usage, these nodes are only available to users and editors if the external provider is mounted. If you unmount your external provider, that data is only accessible from Jahia tools for administrative purposes. Like all content coming from the external provider, this content is not subject to publication. Any extension is visible in both the default and live workspaces immediately.

Writing and updating content

The external provider can be writeable. This means that you can create new content or update existing content from Jahia. This requires you to implement the ExternalDataSource.Writable interface, which defines four methods:

- move

- order

- removeItemByPath

- saveItem

Your provider should at least implement saveItem. saveItem receives ExternalData with all modified properties. Note that if you are using lazy properties, modified properties will be moved from the set of lazy properties to the map of properties. Removed properties will be removed from both the properties map and the lazy properties set.

If content can be deleted, then you should implement removeItemByPath.

The other two methods (move and order) are optional behaviors that need to be implemented only if your provider supports them. For example, the VFSDataSource does not implement order, as files are not ordered on a filesystem, but it does implement move.

Here is an example of how to access binary data from ExternalData and save the data in the filesystem using the VFS API (example from the VFSDataSource).

    public void saveItem(ExternalData data) throws RepositoryException {
        try {
            ExtendedNodeType nodeType = NodeTypeRegistry.getInstance().getNodeType(data.getType());
            if (nodeType.isNodeType(Constants.NT_RESOURCE) && StringUtils.contains(data.getPath(), Constants.JCR_CONTENT)) {
                // Binary content of a file: copy the jcr:data binaries into the VFS file
                OutputStream outputStream = null;
                try {
                    final Binary[] binaries = data.getBinaryProperties().get(Constants.JCR_DATA);
                    // Guard against a missing jcr:data entry before reading the array
                    if (binaries != null && binaries.length > 0) {
                        outputStream = getFile(data.getPath().substring(0, data.getPath().indexOf(JCR_CONTENT_SUFFIX))).getContent().getOutputStream();
                        for (Binary binary : binaries) {
                            InputStream stream = null;
                            try {
                                stream = binary.getStream();
                                IOUtils.copy(stream, outputStream);
                            } finally {
                                IOUtils.closeQuietly(stream);
                            }
                        }
                    }
                } catch (IOException e) {
                    throw new PathNotFoundException("I/O on file : " + data.getPath(), e);
                } catch (RepositoryException e) {
                    throw new PathNotFoundException("unable to get outputStream of : " + data.getPath(), e);
                } finally {
                    IOUtils.closeQuietly(outputStream);
                }
            } else if (nodeType.isNodeType("jnt:folder")) {
                // Folder node: create the matching folder in the filesystem
                try {
                    getFile(data.getPath()).createFolder();
                } catch (FileSystemException e) {
                    throw new PathNotFoundException(data.getPath(), e);
                }
            }
        } catch (NoSuchNodeTypeException e) {
            throw new PathNotFoundException(e);
        }
    }

Provider factories

You can create a configurable external data source that is mounted and unmounted on demand by the server administrator. You do so by adding a bean implementing the ProviderFactory interface, which is responsible for mounting the provider.

The factory must be associated with a node type that inherits from jnt:mountPoint and defines all the properties required to correctly initialize the data source. Then, the mountProvider method instantiates the External Data Provider based on a prototype and initializes the data source. Here is the definition of a mount point from the VFS Provider:

[jnt:vfsMountPoint] > jnt:mountPoint

- j:rootPath (string) nofulltext

And the associated code using OSGi annotations, which creates the provider by mounting the VFS URL passed in j:rootPath:

    @Component(service = ProviderFactory.class, immediate = true)
    public class VFSProviderFactory implements ProviderFactory {

        @Reference
        private ExternalContentStoreProviderFactory externalContentStoreProviderFactory;

        public void setExternalContentStoreProviderFactory(ExternalContentStoreProviderFactory externalContentStoreProviderFactory) {
            this.externalContentStoreProviderFactory = externalContentStoreProviderFactory;
        }

        /**
         * The node type which is supported by this factory
         * @return The node type name
         */
        @Override
        public String getNodeTypeName() {
            return "jnt:vfsMountPoint";
        }

        /**
         * Mount the provider in place of the mountPoint node passed in parameter. Use properties of
         * the mountPoint node to set parameters in the store provider
         *
         * @param mountPoint The jnt:mountPoint node
         * @return A new provider instance, mounted
         * @throws RepositoryException
         */
        @Override
        public JCRStoreProvider mountProvider(JCRNodeWrapper mountPoint) throws RepositoryException {
            ExternalContentStoreProvider provider = externalContentStoreProviderFactory.newProvider();
            provider.setKey(mountPoint.getIdentifier());
            provider.setMountPoint(mountPoint.getPath());

            VFSDataSource dataSource = new VFSDataSource();
            dataSource.setRoot(mountPoint.getProperty("j:rootPath").getString());
            provider.setDataSource(dataSource);
            provider.setDynamicallyMounted(true);
            provider.setSessionFactory(JCRSessionFactory.getInstance());
            try {
                provider.start();
            } catch (JahiaInitializationException e) {
                throw new RepositoryException(e);
            }
            return provider;
        }
    }

Once the provider factory is declared, external-provider-ui lets you manage mount points for that type of external provider.

External data ACL implementation

Revision 4.0 of the external provider introduced ACL support. ACLs can either be provided by the external provider, or you can let Jahia manage them completely.

Default behavior

By default, you can use the Jahia ACL directly on external nodes the same way as other nodes. The ACL will be stored as extensions.

ACL or privileges?

Some external sources can provide all ACLs for their resources, but others can only provide the operations, or privileges, allowed on them. Depending on which applies, you can implement either ACL or privileges support for the provider.

ACL from the provider

You can let the provider get the ACL from the external source. To do so, the Datasource has to implement ExternalDataSource.AccessControllable and set an ExternalDataAcl to the DataSource.

ExternalDataAcl

ExternalDataAcl contains a list of roles, granted or denied, each associated with a user or group. The provider can provide new roles with custom permissions, as Jahia does not export the roles and has no means to save modifications on a running server. These roles should not be edited in the role manager (in a later version, the role editor will be made read-only for such roles).

First create an ExternalDataAcl.

new ExternalDataAcl()

Then fill it with access control entries:

ExternalDataAcl.addAce(type, principal, roles)

type is one of:

ExternalDataAce.Type.GRANT or ExternalDataAce.Type.DENY

principal is a group or a user, in the format u:userKey or g:groupKey

roles is a list of role names

Note: The ExternalDataSource.AccessControllable interface has been updated and the method String[] getPrivilegesNames(String username, String path) has been removed.

ExternalData

To support the ACL you have to set the ExternalDataAcl in the ExternalData using the method ExternalData.setExternalDataAcl(ExternalDataAcl acl).

For example:

    // Grant the "owner" role on the user node to the user it belongs to
    ExternalDataAcl userNodeAcl = new ExternalDataAcl();
    userNodeAcl.addAce(ExternalDataAce.Type.GRANT, "u:" + user.getUsername(), Collections.singleton("owner"));
    userExternalData.setExternalDataAcl(userNodeAcl);

Note that ACLs are read only on an external node when they are provided by the DataSource.

Privileges support

If your data source only provides allowed actions for a resource, you have to implement ExternalDataSource.SupportPrivileges on your data source. Implement the getPrivilegesNames method, which returns, for a user and a path, the list of Jahia privilege names as strings. A Jahia privilege name is the concatenation of a privilege from javax.jcr.security.Privilege and, if necessary, the workspace where it applies. They are structured like this:

privilegeName[_(live | default)]

For example:

Privilege.JCR_READ

Privilege.JCR_ADD_CHILD_NODES + "_default"
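The naming rule can be sketched as a small helper. The class is hypothetical; the two constants copy the string values of javax.jcr.security.Privilege.JCR_READ and JCR_ADD_CHILD_NODES so that the sketch compiles without the JCR API, and a real module would use the Privilege constants directly.

```java
/** Hypothetical helper that builds Jahia privilege names from a JCR privilege and a workspace. */
final class PrivilegeNamesSketch {

    // Copies of the javax.jcr.security.Privilege constant values, for a dependency-free sketch
    static final String JCR_READ = "{http://www.jcp.org/jcr/1.0}read";
    static final String JCR_ADD_CHILD_NODES = "{http://www.jcp.org/jcr/1.0}addChildNodes";

    private PrivilegeNamesSketch() {
    }

    /** Returns the privilege as is when workspace is null, else privilege + "_" + workspace. */
    static String privilegeName(String privilege, String workspace) {
        if (workspace == null) {
            return privilege;
        }
        if (!workspace.equals("live") && !workspace.equals("default")) {
            throw new IllegalArgumentException("Unknown workspace: " + workspace);
        }
        return privilege + "_" + workspace;
    }
}
```

A getPrivilegesNames implementation would typically collect several such names into the array it returns for the given user and path.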

Note that the role tabs in Content Editor or the managers are not accurate, because they display Jahia inherited roles. These roles are meaningless for the external source, as they are not used. Also, as for the ACL implementation, the role tab is read-only, so no operation can be done on it.

Disabling

By default, ACL on external content is enabled. To disable it completely, set the aclSupport property to false in your instance of ExternalContentStoreProvider. Note that Jahia removes some permissions to keep the behavior of Content Editor consistent when the ACL or the content cannot be edited.

Content Editor

If the external source is not writable or extendable and has no ACL support, the content is not editable and the edit menu entry is not available. If the external source does not support ACL but can be overridden or is writable, the roles panel displays as read-only in Content Editor.

Warnings

- When the external data contains ACLs, you cannot update the ACL on the corresponding node (the roles tab in Content Editor is read-only)

- If a module defines roles, they are imported each time the module is deployed. Do not edit them from the settings panel, as you will lose all your changes.

Comparison of data management between JCR, EDP and User Provider

For a list of key data types, this summary table compares how they are managed within the JCR, which has no limitations, with the available actions and expected results when they are managed by an External Data Provider or a Users provider connected to Jahia.

| Within the JCR | What you can do with the External Data Provider | What you can do with the Users provider |
|---|---|---|
| Identifier | Can provide its own UUID or let the EDP generate one | Users and groups are identified by their name only. A UUID is generated by EDP for every user, group, and member node. |
| Property types | All types, i18n, multiple supported | No i18n or binary properties. Multiple values supported. |
| Reference properties | Internal or external references supported | No reference properties for users and groups. Group members internally reference users and groups from the same provider. |
| Search - JCR-SQL2 queries | The QOM model is passed to the EDP; it is up to the implementation to parse and execute the query as it can (ISO-37). QueryHelper is provided to help parse simple queries, but does not support all types of constraints (one type of boolean operator, only = comparison). Query results are aggregated sequentially for each provider, so global ordering may not be consistent. | The QOM is interpreted and the query is transformed into simple key-value pair criteria, on user and group nodes only. Only simple AND or OR searches can be done, no combination of both (fix on and/or selection: QA-9046). Complex queries cannot be implemented in a users provider. Member nodes cannot be queried. Ordering is not supported (MAE-40). |
| Listeners and rules | Not supported yet | |
| Publication | Publication not supported. Content is visible in default and live. This applies to external nodes, but also to extensions. | Same as EDP, but it can be confusing that extension nodes (content stored under users and groups) do not support publication. |
| ACL and permissions | Can set ACLs on any node as an extension (stored in JR) if the provider does not give its own ACL | Extension nodes (content added under users and groups) can have custom ACLs set by the user |
| Write operations | Can define a writeable provider | Not supported |

Sending events to Jahia

Goals

In some cases, it can be useful to send information to Jahia that an item, mounted in an external provider, has been modified externally. This allows you to execute listeners in Jahia that can trigger indexation and cache flush. This event is sent by the external system to Jahia through a specific REST API.

Listening to events

By default, the event listener won’t receive the events from the API. The listener must implement the ApiEventListener interface to get them as any other event.

The EventWrapper class provides a method “isApiEvent()” that can be used to check if the event is coming from the API or not.

Sending events

The REST entry point is a URL of the form:

http://<server>/<context>/modules/external-provider/events/<provider-key>

To find the <provider-key> you can go to Administration>Server>Modules and Extensions>External providers. Your mount point should display in the table and the first column contains the <provider-key> of your provider.

This URL accepts a JSON formatted input defining the list of events to trigger in Jahia.

Events format

The events are a JSON serialization of javax.jcr.observation.Event (see docs.adobe.com/docs/en/spec/javax.jcr/javadocs/jcr-2.0/javax/jcr/observation/Event.html) and contain the following entries:

    {
      type: string
      path: string
      identifier: string
      userID: string
      info: object
      date: string
    }

The type of event is one of the values defined in javax.jcr.observation.Event:

- NODE_ADDED

- NODE_REMOVED

- PROPERTY_ADDED

- PROPERTY_REMOVED

- PROPERTY_CHANGED

- NODE_MOVED

If not specified, the event type is “NODE_ADDED” by default.

- Path is mandatory and should point to the node or property on which the event happened. Note that path is the local path in the external system.

- Identifier is not mandatory. It’s the ID as known by the external system.

- UserID is the username of the user who originally triggered the event.

- Info contains optional data related to the event. For the “node moved” event, it contains the source and target of the move.

- Date is the timestamp of the event, in ISO 8601 compatible format. If not specified, the default value is the current time.

For example:

    curl --header "Content-Type: application/json" \
         --request POST \
         --data '[
           {
             "path":"/deadpool.jpg",
             "userID":"root"
           },
           {
             "type":"NODE_REMOVED",
             "path":"/oldDeadpool.jpg",
             "userID":"root"
           }]' \
         http://localhost:8080/modules/external-provider/events/2dbc3549-15ff-4b08-92b9-94fc78beeba1
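An external system written in Java could assemble the same payload as in the curl example above along these lines. This is a minimal sketch with hypothetical class names; it only builds the JSON text and the endpoint URL, escapes nothing beyond quotes and backslashes, and leaves out the HTTP call and the API key handling. A JSON library is a better fit for real use.

```java
import java.util.List;
import java.util.StringJoiner;

/** Hypothetical builder for the JSON array of events and the endpoint URL. */
final class EventPayloadSketch {

    /** One event; a null type is omitted, so the server-side default (NODE_ADDED) applies. */
    static final class Event {
        final String type;
        final String path;
        final String userId;

        Event(String type, String path, String userId) {
            this.type = type;
            this.path = path;
            this.userId = userId;
        }
    }

    private EventPayloadSketch() {
    }

    /** Serializes the events as a JSON array with the type, path and userID entries. */
    static String toJson(List<Event> events) {
        StringJoiner array = new StringJoiner(",", "[", "]");
        for (Event event : events) {
            StringJoiner object = new StringJoiner(",", "{", "}");
            if (event.type != null) {
                object.add(field("type", event.type));
            }
            object.add(field("path", event.path));
            object.add(field("userID", event.userId));
            array.add(object.toString());
        }
        return array.toString();
    }

    /** Appends the events entry point and the provider key to the base URL. */
    static String endpoint(String baseUrl, String providerKey) {
        return baseUrl + "/modules/external-provider/events/" + providerKey;
    }

    private static String field(String name, String value) {
        return quote(name) + ":" + quote(value);
    }

    /** Minimal escaping: only double quotes and backslashes are handled. */
    private static String quote(String text) {
        return "\"" + text.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }
}
```

The string returned by toJson can be sent as the body of a POST request with the Content-Type header set to application/json.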

Passing external data

In addition, the “info” field can also contain an externalData object which contains a serialized version of the ExternalData object. This data is loaded into the session so that listeners can have access to the external node without requesting back the data to the provider. This avoids a complete round trip to the external data provider. For example, sending events without data could give this sequence:

If the externalData is provided for both events, this would lead instead to this sequence:

External data format

External data can contain the following entries:

    {
      "id": string,
      "path": string,
      "type": string,
      "properties": object,
      "i18nProperties": object,
      "binaryProperties": object,
      "mixin": string[]
    }

The fields id, path and type are mandatory.

- properties is an object with the property names as keys and an array of serialized values as value (an array of one value for properties that are not multi-valued)

- i18nProperties is an object with the language as key, and a structure like properties as value

- binaryProperties is an object with the property names as keys and an array of base64-encoded values as value

For example:

    curl --header "Content-Type: application/json" \
         --request POST \
         --data '[
           {
             "path":"/deathstroke.jpg",
             "userID":"root",
             "info": {
               "externalData":{
                 "id":"/deathstroke.jpg",
                 "path":"/deathstroke.jpg",
                 "type":"jnt:file",
                 "properties": {
                   "jcr:created": ["2017-10-10T10:50:43.000+02:00"],
                   "jcr:lastModified": ["2017-10-10T10:50:43.000+02:00"]
                 },
                 "i18nProperties":{
                   "en":{
                     "jcr:title":["my title"]
                   },
                   "fr":{
                     "jcr:title":["my title"]
                   }
                 },
                 "mixin":["jmix:image"]
               }
             }
           },
           {
             "path":"/deathstroke.jpg/jcr:content",
             "userID":"root",
             "info": {
               "externalData":{
                 "id":"/deathstroke.jpg/jcr:content",
                 "path":"/deathstroke.jpg/jcr:content",
                 "type":"jnt:resource",
                 "binaryProperties": {
                   "j:extractedText":["ZXh0cmFjdCBjb250ZW50IGJ1YmJsZWd1bQ=="]
                 }
               }
             }
           }
         ]' \
         http://localhost:8080/modules/external-provider/events/2dbc3549-15ff-4b08-92b9-94fc78beeba1
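The j:extractedText value in the example above is the Base64 encoding of a short text. The sketch below, with a hypothetical class name, shows how a sender could produce such a value using the standard java.util.Base64 encoder; UTF-8 is assumed as the text encoding.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Hypothetical helper that encodes and decodes values for binaryProperties. */
final class BinaryPropertySketch {

    private BinaryPropertySketch() {
    }

    /** Encodes raw bytes as a Base64 string suitable for a binaryProperties array. */
    static String encode(byte[] value) {
        return Base64.getEncoder().encodeToString(value);
    }

    /** Decodes a Base64 string back to the raw bytes. */
    static byte[] decode(String encoded) {
        return Base64.getDecoder().decode(encoded);
    }

    public static void main(String[] args) {
        byte[] text = "extract content bubblegum".getBytes(StandardCharsets.UTF_8);
        // Prints the same value as the j:extractedText entry in the example above
        System.out.println(encode(text));
    }
}
```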

REST API Security

By default, the REST API is not allowed, so any request is denied. You must provide an API key. An apiKey is not generated automatically: you have to create it manually and configure it in a new config file named org.jahia.modules.api.external_provider.event.cfg, located in /digital-factory-data/karaf/etc.

Key declaration

<name>.event.api.key=<apiKey>

- <apiKey>: the apiKey

- <name>: the name you want; it is only used to group the other config options for the same key

For example:

global.event.api.key=42267ebc-f8d0-4f4d-ac98-21fb8eeda653

Restrict apiKey to some providers

By default an apiKey is used to protect all the providers, but you can restrict the providers allowed by an apiKey.

<name>.event.api.providers=<providerKeys>

- <apiKey>: the apiKey

- <name>: the name used to declare the key

- <providerKeys>: comma-separated list of providerKeys

For example:

providers.event.api.key=42267ebc-f8d0-4f4d-ac98-21fb8eeda653

providers.event.api.providers=provider1,provider2,provider3
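The way these two kinds of entries combine can be illustrated with a small check. This is only a reading of the rules described above, written with hypothetical names; it is not the code Jahia uses to validate keys.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.Properties;

/** Hypothetical check of an apiKey against key and providers entries. */
final class ApiKeyConfigSketch {

    private static final String KEY_SUFFIX = ".event.api.key";
    private static final String PROVIDERS_SUFFIX = ".event.api.providers";

    private ApiKeyConfigSketch() {
    }

    /** True if apiKey is declared and either unrestricted or restricted to a list with providerKey. */
    static boolean isAllowed(Properties config, String apiKey, String providerKey) {
        for (String property : config.stringPropertyNames()) {
            if (!property.endsWith(KEY_SUFFIX) || !config.getProperty(property).equals(apiKey)) {
                continue;
            }
            // The part before the suffix is the name that groups the options of this key
            String name = property.substring(0, property.length() - KEY_SUFFIX.length());
            String providers = config.getProperty(name + PROVIDERS_SUFFIX);
            if (providers == null) {
                return true; // no restriction declared: the key covers all providers
            }
            if (Arrays.asList(providers.trim().split("\\s*,\\s*")).contains(providerKey)) {
                return true;
            }
        }
        return false;
    }

    /** Parses configuration text in the key=value format shown above. */
    static Properties parse(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return properties;
    }
}
```

With the two examples above in one file, the global key would be accepted for any provider, while the providers key would be accepted only for provider1, provider2 and provider3.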