Upgrading to jCustomer 2.x & jExperience 3.x

This document details the process for migrating from jCustomer 1.x to jCustomer 2.x. Before starting the migration, read this entire document and make sure you understand the operations involved in the upgrade.

jCustomer 2.x introduces significant breaking changes. Please:

- Read all of the instructions on this page carefully before proceeding with the upgrade process

- Upgrade jCustomer to 1.5.7+ before applying these instructions

- Always upgrade towards the latest version of jCustomer 2.x

Overview

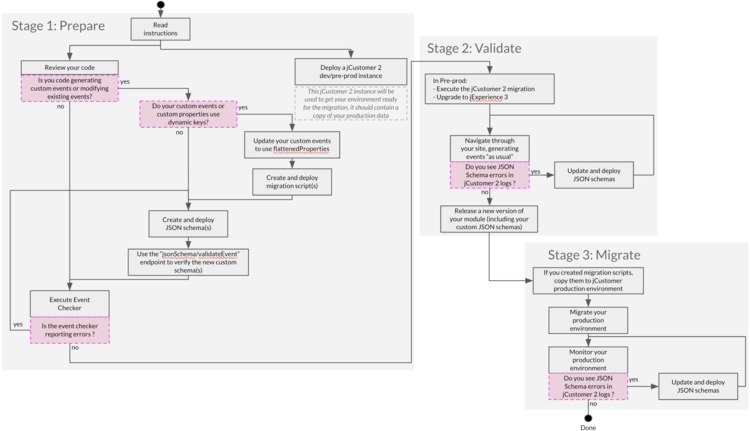

The following diagram provides an overview of the key migration steps.

Stage 1: Prepare the migration

Before starting the data migration, you must complete a few preparatory steps.

During this stage, you will work with a development (or pre-prod) instance of jCustomer containing a copy of your production data. This is necessary because you will replay all of your older events to make sure they are compatible with the new JSON Schemas. At the end of this stage, you will have created all JSON Schemas and migration scripts and will be ready to try out a migration to jCustomer 2.x.

jCustomer 2.x brings two major changes: validation of events against JSON Schemas and changes to the data model.

To prepare the migration, you will need to:

- Identify whether you use a custom data model and, if so, create the corresponding JSON Schemas

- Identify whether you rely on event properties with dynamic keys and, if so, implement a custom migration script

- Identify whether your custom Kibana dashboards are impacted

- Identify whether your custom modules rely on jExperience and, if so, update them to follow best practices

1.1 - Prepare your environment

To complete Stage 1 of the migration, aside from your regular development environment, you will need:

- root access to a jCustomer 1.x instance containing a copy of your production data

- root access to a jCustomer 2.x instance (freshly started, without data)

- the ability to run our custom event checker tool

Do not proceed to the next step until the above conditions are met.

1.2 - Using a custom data model?

With the introduction of JSON Schemas in jCustomer 2.x, any event submitted to a jCustomer 2.x environment must comply with a pre-existing JSON Schema to be accepted and processed. An event is considered part of a "custom data model" if it has either:

- An event type that is not known to jExperience or Apache Unomi. By default, jCustomer and jExperience ship with all the schemas needed to support their functionalities, but you might need to create additional schemas for any custom events you created. Known event types can be found in the jExperience and Apache Unomi codebases

- An event property that is not known to jExperience or Apache Unomi

Note: Custom data models for profiles and sessions do not require any action. Only events are concerned, because profiles and sessions cannot be created or modified from any public endpoint.

The next step is to verify whether your implementation uses a custom data model. Two methods are available to help you determine this and whether you need additional JSON Schemas or JSON Schema extensions:

- by looking at your code

- by looking at your existing data

1.2.1 - In your code

Review any integration code added since you deployed your jCustomer/jExperience environment. This usually involves checking custom Jahia modules or jCustomer plugins, including any JavaScript code added to send events to jCustomer.

For example, if you send custom events to jCustomer using the wem.js library, you will need to create custom JSON Schemas for those events. This is true even if you only added some custom parameters to existing events, such as the page view event.

1.2.2 - In your existing data

Although the migration process (from jCustomer 1.x to jCustomer 2.x) handles the migration of existing events, it does not create additional JSON Schemas. Any event received by an environment after its migration must still match a valid JSON Schema to be accepted and processed by the jCustomer 2.x server; otherwise, it is rejected.

To help you validate your readiness to migrate to jCustomer 2.x, we built a custom event checker tool that automatically fetches all of your existing events (from the past 60 days by default), migrates them to the jCustomer 2.x data model, and validates them against the JSON Schemas deployed in jCustomer 2.x. By reviewing the generated error messages, you can update your JSON Schemas and iteratively modify, deploy, and test them until all your events validate. You can also run the custom event checker and share its output with our support team.
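For a quick first look at your data before running the event checker, the sketch below groups exported events by type and lists the property keys each type uses. It assumes you have exported events (for example, from your jCustomer 1.x event indices) to a list of JSON objects with `eventType` and `properties` fields. `KNOWN_EVENT_TYPES` is a hypothetical, incomplete placeholder that you would fill from the jExperience and Apache Unomi codebases. This sketch complements the custom event checker; it does not replace it.

```python
import json
from collections import defaultdict

# Hypothetical placeholder: fill this set from the jExperience and
# Apache Unomi codebases. It is NOT an authoritative list.
KNOWN_EVENT_TYPES = {"view", "form", "login"}


def inventory(events):
    """Group events by eventType and collect the property keys seen per type."""
    keys_by_type = defaultdict(set)
    for event in events:
        event_type = event.get("eventType", "<missing>")
        keys_by_type[event_type].update((event.get("properties") or {}).keys())
    return keys_by_type


def report(events):
    """Summarize event types missing from the placeholder list and key counts per type."""
    keys_by_type = inventory(events)
    unknown = sorted(t for t in keys_by_type if t not in KNOWN_EVENT_TYPES)
    key_counts = {t: len(keys) for t, keys in sorted(keys_by_type.items())}
    return {"unknownEventTypes": unknown, "propertyKeyCounts": key_counts}


if __name__ == "__main__":
    # Illustrative sample data, not real jCustomer events
    sample = [
        {"eventType": "view", "properties": {}},
        {"eventType": "download", "properties": {"fileName": "a.pdf"}},
        {"eventType": "form", "properties": {"name": "x", "firstName": "y"}},
        {"eventType": "form", "properties": {"address": "z", "age": 30}},
    ]
    print(json.dumps(report(sample), indent=2))
```

An event type whose number of distinct keys keeps growing across events (like `form` in the sample) is a hint that it uses dynamic keys, as described in section 1.4.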

1.3 - Create and deploy JSON Schemas

If you identified that you currently use a custom data model, you will need to create and deploy JSON Schemas. At this point, it is easiest to work directly against the fresh jCustomer 2.x instance you prepared earlier.

At the end of this step, every event issued by your codebase should have a valid JSON Schema. In other words, if you went to production with these schemas today, regardless of your previous data, you would have all the JSON Schemas needed to operate jCustomer 2.x in production.

1.3.1 - Creating and deploying new schemas

Any event submitted to jCustomer 2.x public endpoints is rejected unless it complies with an existing JSON Schema.

If you are new to JSON Schemas in jCustomer (or Apache Unomi) 2.x, please review First steps with Apache Unomi, which contains step-by-step instructions to create your first JSON Schema. Do not go to production until you have created all JSON Schemas needed to handle your events.

Here is an example of a request that creates a JSON Schema:

```shell
curl --location --request POST 'http://localhost:8181/cxs/jsonSchema' \
  -u 'karaf:karaf' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "$id": "https://vendor.test.com/schemas/json/events/dummy/1-0-0",
    "$schema": "https://json-schema.org/draft/2019-09/schema",
    "self": {
      "vendor": "com.vendor.test",
      "name": "dummy",
      "format": "jsonschema",
      "target": "events",
      "version": "1-0-0"
    },
    "title": "DummyEvent",
    "type": "object",
    "allOf": [
      { "$ref": "https://unomi.apache.org/schemas/json/event/1-0-0" }
    ],
    "properties": {
      "properties": {
        "$ref": "https://vendor.test.com/schemas/json/events/dummy/properties/1-0-0"
      }
    },
    "unevaluatedProperties": false
  }'
```
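The event schema above delegates its `properties` field to a second schema through `$ref`, so that schema must also be deployed. The sketch below builds such a properties schema in Python and prepares a POST to the same `/cxs/jsonSchema` endpoint, using the `karaf:karaf` credentials from the curl example. The property names (`campaignId`, `label`) and the `self.name` value are hypothetical, and the exact `self` fields expected for a sub-schema are an assumption: check the Apache Unomi JSON Schema documentation before deploying.

```python
import base64
import json
import urllib.request

# Must match the $ref used in the event schema above
EVENT_PROPERTIES_SCHEMA_ID = "https://vendor.test.com/schemas/json/events/dummy/properties/1-0-0"


def build_properties_schema():
    """Build the sub-schema referenced by the dummy event schema's "properties" field."""
    return {
        "$id": EVENT_PROPERTIES_SCHEMA_ID,
        "$schema": "https://json-schema.org/draft/2019-09/schema",
        "self": {
            "vendor": "com.vendor.test",
            "name": "dummyProperties",  # hypothetical name
            "format": "jsonschema",
            "version": "1-0-0",
        },
        "title": "DummyEventProperties",
        "type": "object",
        "properties": {
            # Hypothetical properties: replace them with the ones your events send
            "campaignId": {"type": "string"},
            "label": {"type": "string"},
        },
    }


def build_deploy_request(schema, base_url="http://localhost:8181", user="karaf", password="karaf"):
    """Prepare (without sending) a POST to /cxs/jsonSchema, mirroring the curl example."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url}/cxs/jsonSchema",
        data=json.dumps(schema).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_deploy_request(build_properties_schema())
    # Uncomment to deploy against your jCustomer 2.x instance:
    # with urllib.request.urlopen(request) as response:
    #     print(response.status)
    print(request.get_method(), request.full_url)
```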

You can also find information on sending events via jExperience's tracker here.

1.3.2 - Testing your events on your schemas

As mentioned earlier, we recommend using the jCustomer custom event checker to verify that your existing events match a JSON Schema. In general, do not proceed with the migration while events are still being rejected.

You can also verify events individually by submitting a POST request to an admin endpoint dedicated to schema validation: /cxs/jsonSchema/validateEvent, which will provide details about schema errors (if the event does not match a schema). You can find more information about the new event validation endpoint in the Apache Unomi documentation.
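The sketch below shows one way to call that endpoint from Python with only the standard library. It assumes the endpoint accepts a single event as the JSON request body and reuses the default `karaf:karaf` credentials from the curl example; the event itself is hypothetical (a `dummy` event with an illustrative property), so adapt it to your own event types.

```python
import base64
import json
import urllib.error
import urllib.request


def build_validate_event_request(event, base_url="http://localhost:8181", user="karaf", password="karaf"):
    """Prepare (without sending) a POST of a single event to the validateEvent admin endpoint."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url}/cxs/jsonSchema/validateEvent",
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


# Hypothetical event of the "dummy" type; the property is illustrative only
dummy_event = {
    "eventType": "dummy",
    "scope": "mySite",
    "properties": {"campaignId": "spring"},
}

if __name__ == "__main__":
    request = build_validate_event_request(dummy_event)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            # The response body is expected to describe schema errors, if any
            print(response.status, response.read().decode("utf-8"))
    except urllib.error.HTTPError as error:
        # Non-2xx answer from jCustomer: print its body for details
        print(error.code, error.read().decode("utf-8"))
    except OSError as error:
        # Connection problems (URLError is a subclass of OSError)
        print("Could not reach jCustomer:", error)
```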

1.3.3 - Packaging your json schemas for production

You can package your custom JSON Schemas inside a Jahia module; they are then sent to jCustomer for deployment when the module starts. You can find information on how to do this in our jExperience module packaging documentation.

1.4 - Using dynamic keys for event properties?

If you use dynamic keys for event properties, you will have to create a migration script to migrate these properties before creating a new JSON Schema. The key of a property is the name of the property. Keys are dynamic when the names of the properties can differ between events of the same event type, as is the case for form events, for example.

Example: a first form contains name and first name fields. When an event is sent with this form's data, the name and first name are sent as event properties. A second form contains address, age, and location fields, so an event sent with its data has the properties address, age, and location. In both cases, the event type is "form". The migration of form events is already handled by jCustomer: their properties are moved to a new event field named flattenedProperties.

This change was introduced to avoid a mapping explosion in Elasticsearch. The mapping for flattenedProperties is not dynamic, which avoids issues when events carry many different property keys. The properties field of events still generates dynamic mappings, so any data you add to this field requires a specific JSON Schema to constrain it.

If you have events that behave like form events, you will have to create a migration script to move the dynamic key properties to the flattenedProperties field. Once the migration is done, you can create the JSON Schema for your event type.
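Your actual migration script runs inside jCustomer as a Groovy script (typically performing a Painless reindex, like the example in section 1.5.1). The Python sketch below only illustrates the per-event transformation such a script would perform: keep stable keys in `properties` and move dynamic keys under `flattenedProperties`. The `survey` event type, the `static_keys` split, and the `fields` sub-key are assumptions for illustration; align the target layout with how jCustomer migrates form events and with the JSON Schema you plan to write.

```python
import copy


def move_dynamic_keys(event, static_keys=frozenset(), target_key="fields"):
    """Return a copy of the event with dynamic property keys moved to flattenedProperties.

    static_keys: property keys that are stable across events and stay in "properties".
    target_key: hypothetical sub-key under flattenedProperties receiving the dynamic keys.
    """
    migrated = copy.deepcopy(event)  # never mutate the source event
    properties = migrated.get("properties") or {}
    dynamic = {k: v for k, v in properties.items() if k not in static_keys}
    # Keep only the stable keys in "properties" (creates an empty dict if absent)
    migrated["properties"] = {k: v for k, v in properties.items() if k in static_keys}
    if dynamic:
        flattened = migrated.setdefault("flattenedProperties", {})
        flattened.setdefault(target_key, {}).update(dynamic)
    return migrated


if __name__ == "__main__":
    # Hypothetical event: question keys differ from one survey to another
    event = {"eventType": "survey", "properties": {"surveyId": "s1", "q1": "yes", "q7": "no"}}
    print(move_dynamic_keys(event, static_keys={"surveyId"}))
```

With this split, the JSON Schema for `survey` only needs to describe `surveyId` in `properties`, while the varying question keys no longer create new Elasticsearch mappings.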

1.5 - Create migration scripts

As jCustomer 2.x introduces breaking changes in its data model, it includes a set of migration scripts to transform existing data from the jCustomer 1.5.7+ data model to the jCustomer 2.x data model.

However, since jCustomer can only migrate objects it knows about, some of your custom events might require you to create migration scripts and inject them into the migration process.

Before starting the migration, jCustomer builds a list of all migration scripts, both those in its internal codebase and those placed by users in the data/migration/scripts directory. It then sorts that list and executes the scripts sequentially. By following a naming pattern, you can therefore control the order in which your scripts are executed.

The Apache Unomi 2.x documentation contains a dedicated section explaining how to create additional migration scripts.
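A sketch of how a naming pattern can drive execution order follows. It assumes the pattern documented for Apache Unomi 2.x is `migrate-<version>-<priority>-<name>.groovy` and that ordering is by version, then priority; verify both against the documentation for your jCustomer version before naming your own scripts. The sketch compares versions numerically, so `2.0.10` sorts after `2.0.2`; all file names are hypothetical.

```python
import re

# Assumed naming pattern (check the Apache Unomi 2.x migration documentation):
# migrate-<version>-<priority>-<name>.groovy, e.g. migrate-2.0.0-15-myEvents.groovy
SCRIPT_NAME = re.compile(r"^migrate-(\d+(?:\.\d+)*)-(\d+)-([\w.-]+)\.groovy$")


def sort_key(filename):
    """Sort by numeric version first, then by numeric priority."""
    match = SCRIPT_NAME.match(filename)
    if match is None:
        raise ValueError(f"Unexpected migration script name: {filename}")
    version, priority, _name = match.groups()
    return tuple(int(part) for part in version.split(".")), int(priority)


def execution_order(filenames):
    """Return the file names in the order this sketch would execute them."""
    return sorted(filenames, key=sort_key)


if __name__ == "__main__":
    names = [
        "migrate-2.0.0-20-customEvents.groovy",
        "migrate-2.0.0-05-builtIn.groovy",
        "migrate-2.0.10-01-later.groovy",
        "migrate-2.0.2-01-middle.groovy",
    ]
    for name in execution_order(names):
        print(name)
```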

You can learn more about what was changed in Apache Unomi 2 on the official website.

1.5.1 - Implement a custom migration script

If you need to implement a custom migration script, refer to the documentation on the Apache Unomi website. Here is an example of a built-in migration Groovy script provided with Apache Unomi, from the tools/shell-commands/src/main/resources/META-INF/cxs/migration directory:

```groovy
// migrationContext and bundleContext are provided to the script by the migration tool
MigrationContext context = migrationContext
// Elasticsearch address and index prefix, read from the migration configuration
String esAddress = context.getConfigString("esAddress")
String indexPrefix = context.getConfigString("indexPrefix")
// Build the creation request for the new profile index from bundle resources
String baseSettings = MigrationUtils.resourceAsString(bundleContext, "requestBody/2.0.0/base_index_mapping.json")
String mapping = MigrationUtils.extractMappingFromBundles(bundleContext, "profile.json")
String newIndexSettings = MigrationUtils.buildIndexCreationRequest(baseSettings, mapping, context, false)
// Reindex "<prefix>-profile" with the new settings, transforming documents
// with the profile_migrate.painless script
MigrationUtils.reIndex(context.getHttpClient(), bundleContext, esAddress, indexPrefix + "-profile",
        newIndexSettings, MigrationUtils.getFileWithoutComments(bundleContext, "requestBody/2.0.0/profile_migrate.painless"), context)
```

This script references request body files that are available here.

1.6 - Update your custom Kibana dashboards

Because of the changes related to dynamic keys, it is not yet possible to build Kibana dashboards based on:

- URL parameters

- Interests

- Form events specific fields

These fields now also use flattenedProperties, which cannot be used to build visualizations. If this causes any issues, please report it to the Jahia support team.

1.7 - Update your configuration & your custom modules to follow best practices

1.7.1 - For any custom modules relying on jExperience

If any of your custom modules depend on jExperience, update them as well when you update jExperience.

1.7.2 - New web tracker (wem.js)

As part of jExperience 3.3.0, we released a new version of the web tracker (wem.js).

This new major version of the tracker was an opportunity for some cleanup and removal of functions not used by jExperience.

The following functions were removed from the web tracker:

- loadContent()

- extends()

- _createElementFromHTML()

- _loadScript()

- sendAjaxFormEvent()

The tracker remains extensible: if you still need these functions (or similar ones), you can integrate this logic into your own codebase.

1.7.3 - New methods to communicate with jCustomer

Since jExperience 2.8.0, methods have been added to ease communication between Jahia modules and jCustomer. If your modules make calls to jCustomer, you must update them to use the methods provided by jExperience.

The new methods are described in the "Calling jCustomer public endpoint" and "Calling jCustomer private / admin endpoint" sections of the Using jExperience java services page.

1.7.4 - Use jExperience as a proxy to jCustomer

Since jExperience 2.7.2, you can use jExperience as a proxy to jCustomer. In other words, jCustomer does not need to be exposed to the internet. This setting ensures that calls to context.json and eventcollector are always sent to the same domain as the website; as a result, cookies are always set as first-party cookies, which prevents browsers from blocking them. This setting is described in Installing Elasticsearch, jCustomer, and jExperience, in the section "Properties to configure in the jExperience settings file" => "jCustomer public URL".

1.8 - Wrapping up stage 1

When reaching this stage, you should have the following:

- A jCustomer 2.x environment containing all custom JSON Schemas needed to accept events generated from now on

- Migration scripts necessary to migrate your old events to the new jCustomer 2.x data model

Do not proceed until the above conditions are met.

Stage 2: Validate in pre-prod

3.3 - Wrapping up stage 3

Your production environment should now be fully migrated. As an extra precaution, you can keep monitoring jCustomer logs for schema validation errors for a few weeks.

Keep in mind, however, that JSON Schemas also increase the robustness of your platform by rejecting events that do not conform to a schema. If your platform is targeted by attacks, these would trigger legitimate schema validation errors.